By-Example Synthesis of Vector Textures

Christopher Palazzolo, Oliver van Kaick, David Mould, Pacific Graphics, 2025

Abstract

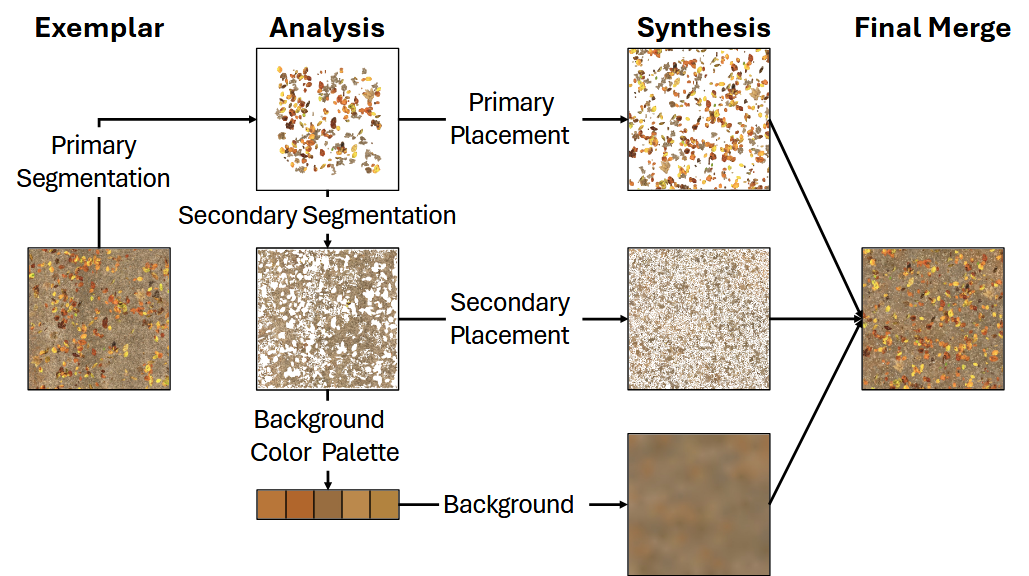

We propose a new method for synthesizing an arbitrarily sized novel vector texture given a single raster exemplar. In an analysis phase, our method first segments the exemplar to extract primary textons, secondary textons, and a palette of background colors. Then, it clusters the primary textons into categories based on visual similarity, and computes a descriptor to capture each texton's neighborhood and inter-category relationships. In the synthesis phase, our method first constructs a gradient field with a set of control points containing colors from the background palette. Next, it places primary textons based on the descriptors, in order to replicate a texton context similar to that of the exemplar. The method also places secondary textons to complement the background detail. We compare our method to previous work with a wide range of perceptually based metrics, and show that we are able to synthesize textures directly in vector format with quality similar to methods based on raster image synthesis.
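To make the background step more concrete, here is a minimal sketch of a gradient field driven by colored control points. The abstract does not say how the colors are blended between control points, so the inverse-distance weighting used here, along with the names `gradient_color`, `power`, and the sample palette, are assumptions for illustration only and not the paper's formulation.

```python
from math import hypot

def gradient_color(x, y, control_points, power=2.0, eps=1e-9):
    """Blend control-point colors at (x, y).

    Assumption: inverse-distance weighting. The paper may use a
    different interpolation scheme for its gradient field.
    """
    total_weight = 0.0
    acc = [0.0, 0.0, 0.0]
    for (cx, cy), color in control_points:
        d = hypot(x - cx, y - cy)
        if d < eps:
            # Query point coincides with a control point: use its color.
            return tuple(color)
        w = 1.0 / d ** power
        total_weight += w
        for i in range(3):
            acc[i] += w * color[i]
    return tuple(c / total_weight for c in acc)

# Hypothetical background palette placed at two control points (RGB).
control_points = [
    ((0.0, 0.0), (200.0, 180.0, 150.0)),
    ((1.0, 1.0), (120.0, 100.0, 80.0)),
]
mid = gradient_color(0.5, 0.5, control_points)
```

A query point equidistant from both control points receives the average of their colors, while a query exactly on a control point returns that point's color unchanged.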

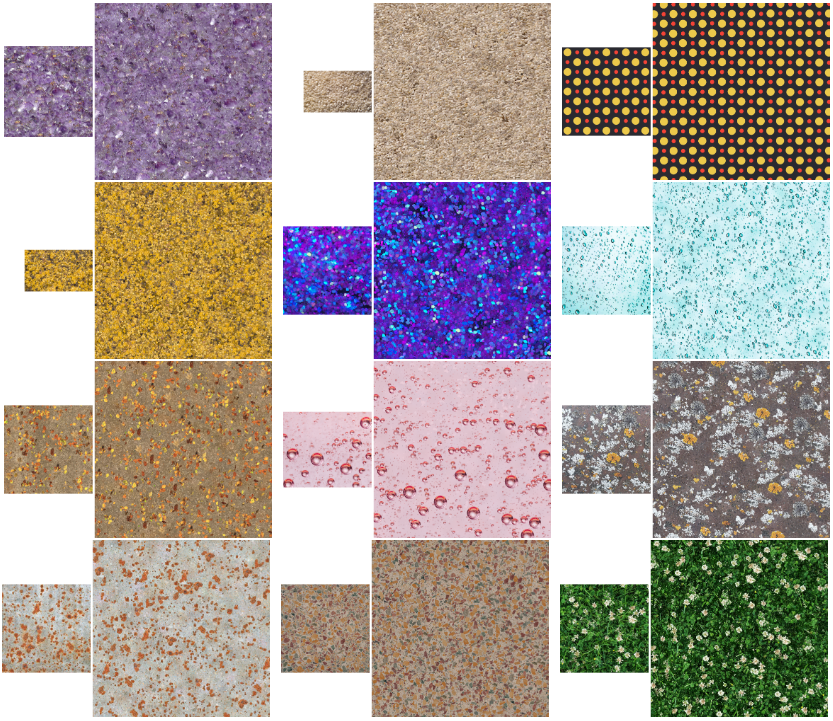

Results

Textures synthesized using our algorithm. Each texture pair shows a raster exemplar (left) and a synthetic vector image (right, rendered at 1000 × 1000). As a reminder, all of our results are pure vector, composed only of solid-colored polygons and a background gradient field.

Pacific Graphics 2025 Presentation

A recording of my presentation of the paper, given at Pacific Graphics 2025 in Taipei City, Taiwan.

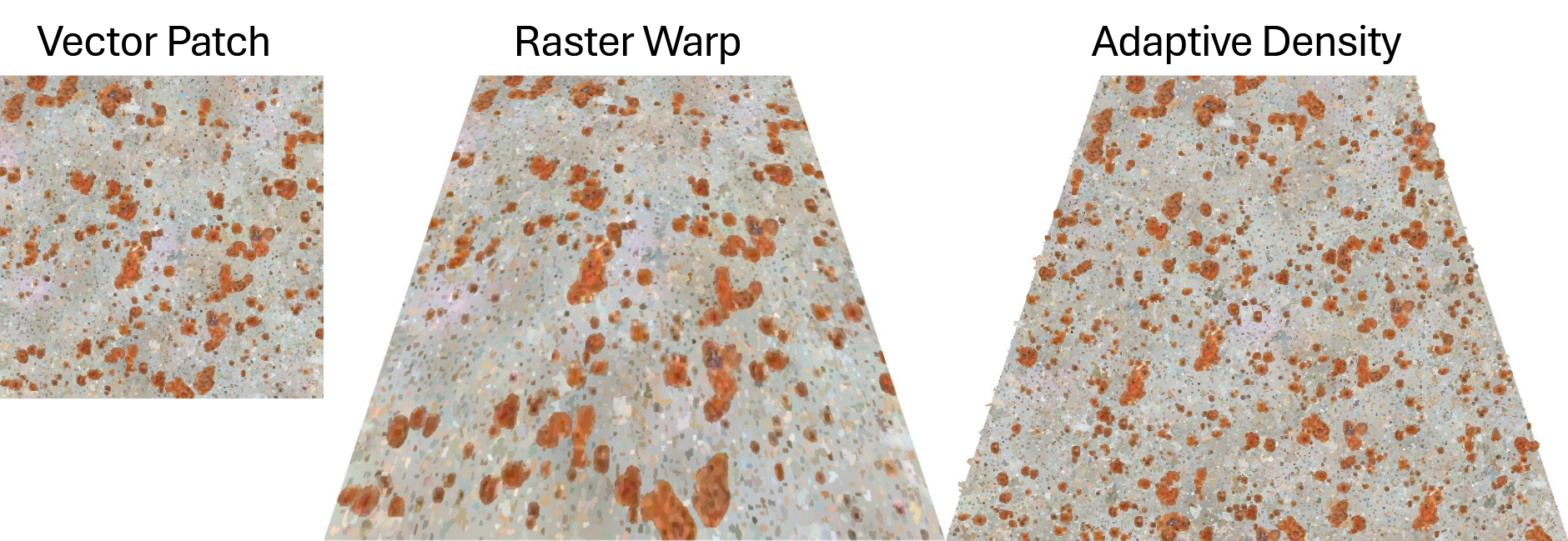

Vector Edits

Some editing operations are easy to perform on vector textons but would be more difficult on a raster image. Texture interpolation: the textons of one result (represented as solid-colored polygons) can move and warp into the textons of another. Adaptive density: the domain of the texture increases in area while the density of the exemplar is maintained.
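Both edits can be sketched in a few lines once textons are polygons. The code below is an illustration under stated assumptions, not the method used for the results: it assumes the two polygons already have the same number of vertices in corresponding order, uses plain linear interpolation, and treats density as textons per unit area. The function names `lerp_polygon` and `texton_count` are hypothetical.

```python
def lerp_polygon(poly_a, poly_b, t):
    """Linearly interpolate between two polygons at parameter t in [0, 1].

    Assumption: both polygons have the same vertex count and their
    vertices are already in correspondence.
    """
    if len(poly_a) != len(poly_b):
        raise ValueError("polygons must have the same number of vertices")
    return [
        ((1 - t) * ax + t * bx, (1 - t) * ay + t * by)
        for (ax, ay), (bx, by) in zip(poly_a, poly_b)
    ]

def texton_count(exemplar_count, exemplar_area, target_area):
    """Number of textons needed to keep the exemplar's density in a new domain."""
    density = exemplar_count / exemplar_area
    return round(density * target_area)

# Halfway between two triangles.
halfway = lerp_polygon([(0, 0), (2, 0), (0, 2)], [(2, 2), (4, 2), (2, 4)], 0.5)

# An exemplar with 50 textons in 256 x 256 pixels, extended to 512 x 512.
needed = texton_count(50, 256 * 256, 512 * 512)
```

On a raster image the same operations would require resampling or re-synthesizing pixels, whereas here they reduce to arithmetic on vertex coordinates and texton counts.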

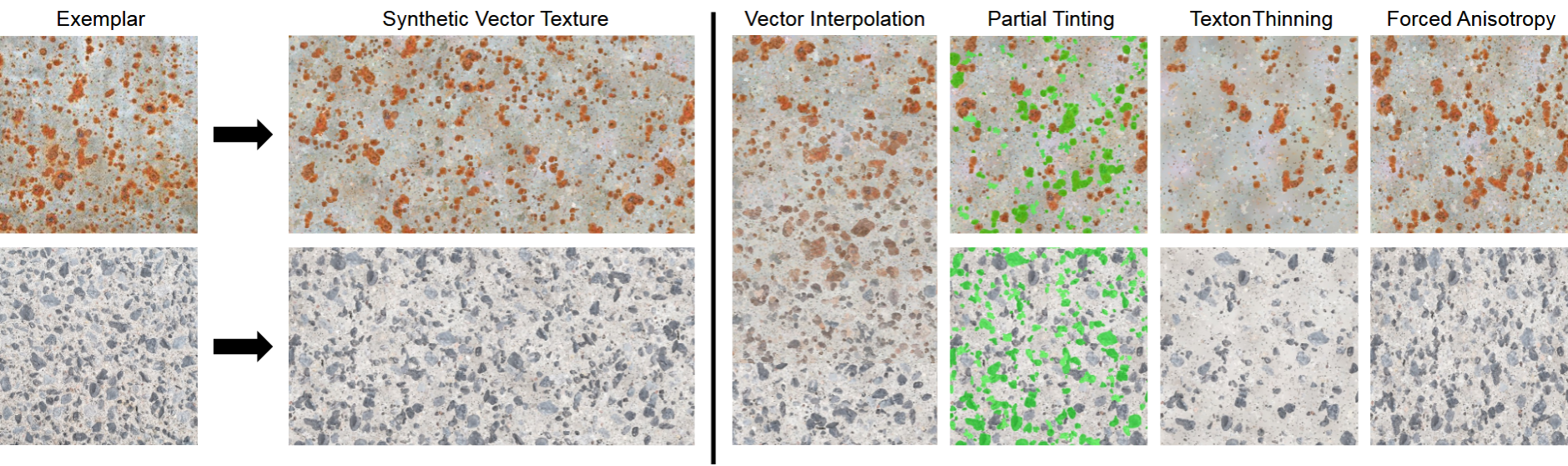

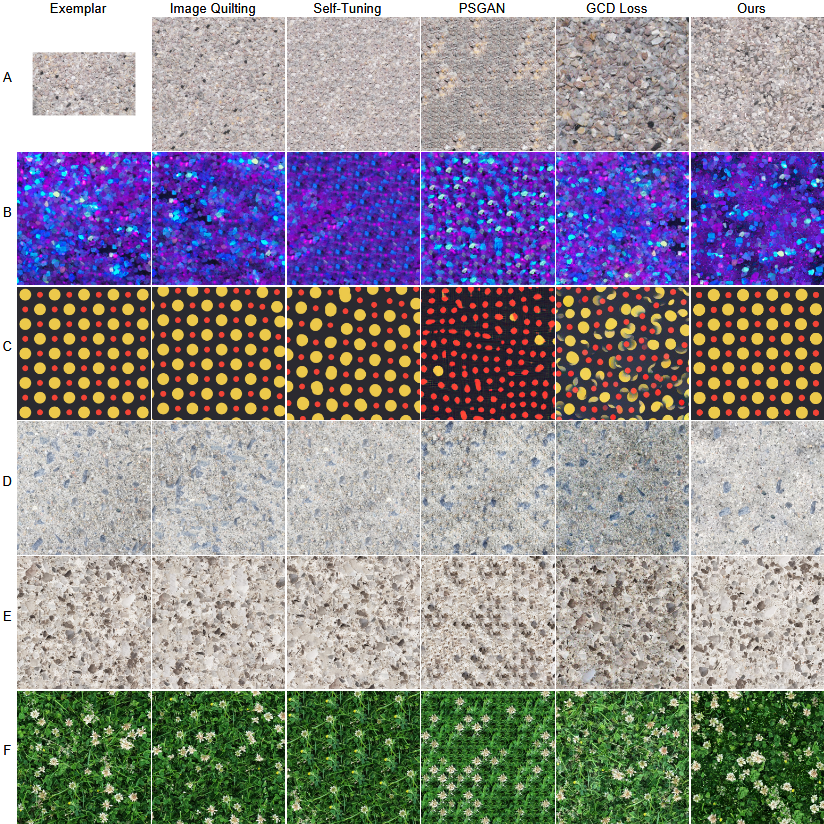

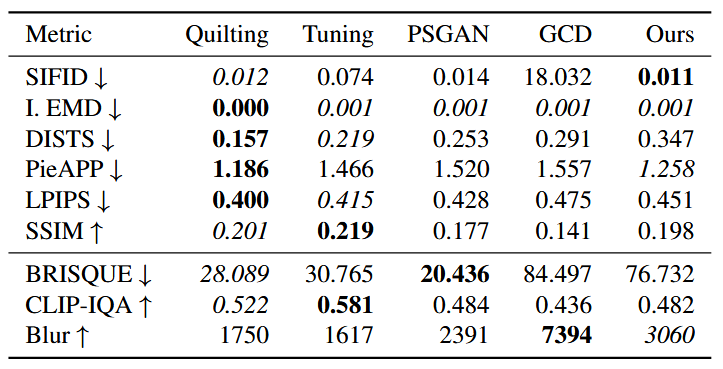

Comparison With SOTA

Our results compared with previous state-of-the-art methods. Although our textures are produced directly in vector format, they are comparable to previous work both visually and according to the metrics.

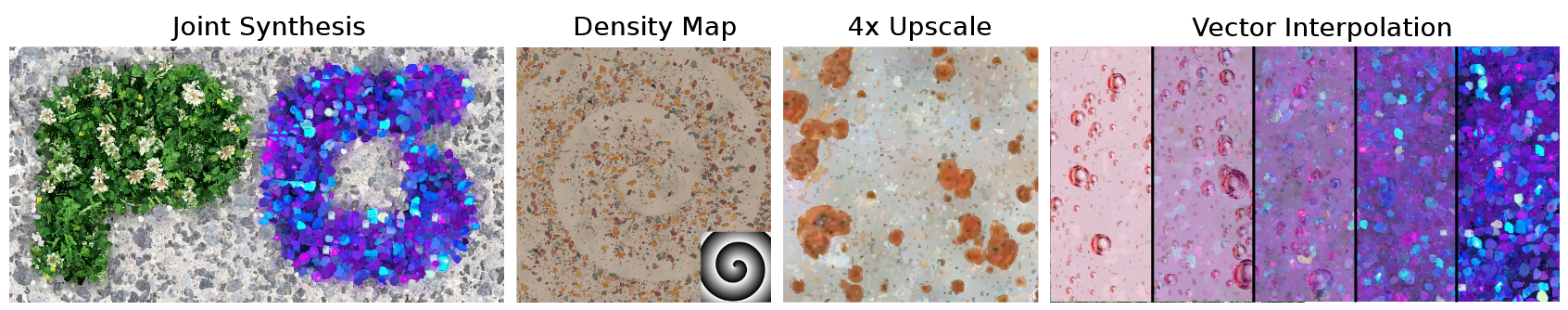

Methodology

A high-level overview of our texture synthesis algorithm.

Citation

@inproceedings{PALAZZOLO20251296,
  booktitle = {Pacific Graphics Conference Papers, Posters, and Demos},
  editor = {Christie, Marc and Han, Ping-Hsuan and Lin, Shih-Syun and Pietroni, Nico and Schneider, Teseo and Tsai, Hsin-Ruey and Wang, Yu-Shuen and Zhang, Eugene},
  title = {{By-Example Synthesis of Vector Textures}},
  author = {Palazzolo, Christopher and Kaick, Oliver van and Mould, David},
  year = {2025},
  publisher = {The Eurographics Association},
  ISBN = {978-3-03868-295-0},
  DOI = {10.2312/pg.20251296}
}

Acknowledgements

We thank the anonymous reviewers for their valuable feedback. This work was partially supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) and Carleton University. We would also like to thank the members of the Graphics, Imaging, and Games Lab (GIGL) as well as our friends outside the lab for their suggestions and comments.